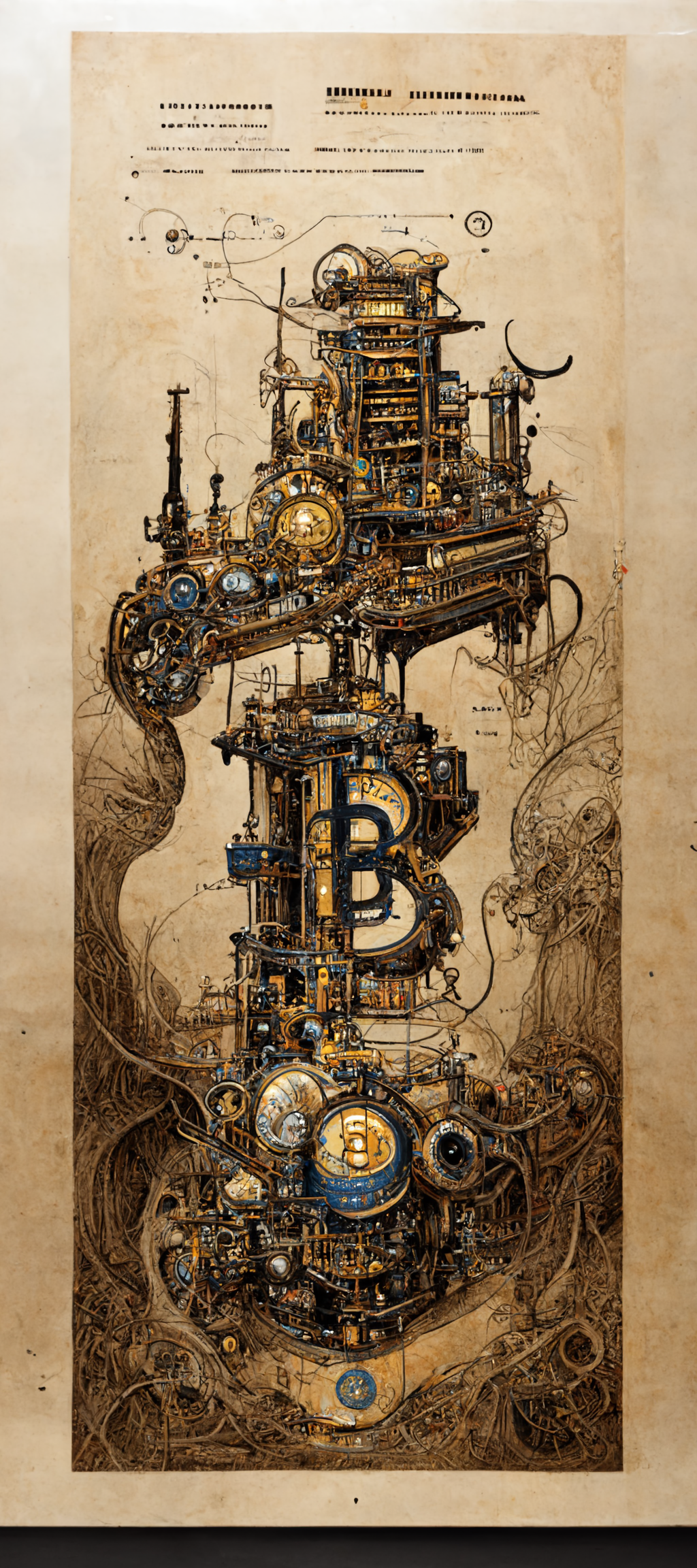

#3 - Brute forcing creativity

The image attached to this newsletter was created by Artificial Intelligence. After I gave the instructions (the prompt), it generated four pictures, one of which led to the final one you are looking at. These new AI tools make it possible to endlessly iterate, make minor tweaks and try out new ideas. It took me a day of tinkering to generate multiple images like this. Imagine the time it would take a real artist. The images generated by AI are not better, more beautiful, or more creative than artwork made by an actual human being. Quite the contrary, I found that AI art is filled with weird artifacts. It is the speed that is revolutionary. Trying out ideas and iterating until the image was satisfactory was quite a thrilling experience. There is no name for this process yet, so I call it: brute forcing creativity.

What I have been thinking.

At the beginning of June, Twitter was flooded with collages of pictures. Each collage was accompanied by a cryptic caption, for example, "medieval painting of the wi-fi not working," "A still of Homer Simpson in Psycho (1960)," or "Spider-Man from Ancient Rome." These images were generated by Artificial Intelligence, in this case, Dall-E 2. The user writes what they want to see, and the AI generates a realistic image based on the input. I initially refused to partake in the hysterical excitement surrounding the (closed) beta launch of Dall-E. It reminded me of Clubhouse, the app that took tech Twitter by storm during COVID-19: everybody who got access made it sound like the best thing ever, while most people were watching from the sidelines, waiting for access. I don't like watching from the sidelines, so I concluded that Dall-E 2 sucks. All the images had weird artifacts anyway, I said to myself, trying to curb my jealousy. It will probably fade away soon.

It didn't fade away soon.

If anything, my Twitter feed filled itself with even more AI-generated pictures. However, the (not so) funny Dall-E memes were replaced with lush anime landscapes, impressive portraits, beautiful paintings, and modern architecture. Check out this feed of the Midjourney software. I saw more and more artists posting YouTube videos in which they tried generating art for their channel using one of these AI systems. Yes, systems. Dall-E isn't the only tool you can use to generate AI images. There are two competitors at this point (and probably more in the future), and the beauty is that you can try out both. Midjourney is the first one; you can check it out here.

The onboarding is easy; you join the Discord server and follow the FAQ. After the free trial, you need to get a subscription. I chose the 30-dollar monthly subscription, which I canceled immediately. The other AI system I tried was Stable Diffusion, which launched a web interface last week. You can try it here. There are no subscriptions; instead, you pay per image (around $12 per 1000 images). I like the subscription model of Midjourney better since it gives me unlimited images. I learned that AI art is mainly trial and error, but I will return to that. Armed with a subscription and a bag of credits, I set foot into the land of AI.

First steps

Flash forward 48 hours. Damn. This AI stuff is addictive. I started with Stable Diffusion but quickly burned through my credits, so I switched to Midjourney. That is one of the first things I noticed: you will iterate on, revisit, and fine-tune your prompt many times. That makes an unlimited plan a better deal than credits. Maybe this will change in the future when the software gets better at understanding our prompts and when it becomes easier to tweak small parts of the image without having to start over.
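To put a rough number on the credits-versus-subscription question, here is a small sketch using the prices mentioned earlier (about $12 per 1000 images versus a $30 monthly plan). The function names are made up for illustration, and the prices are only the approximate figures from this newsletter, not official price lists.

```python
# Rough comparison of pay-per-image credits vs. a flat monthly subscription.
# Prices are the approximate figures quoted in the newsletter (assumptions).

SUBSCRIPTION_USD = 30        # flat monthly plan
USD_PER_1000_IMAGES = 12     # pay-per-image pricing


def credit_cost(images: int, usd_per_1000: float = USD_PER_1000_IMAGES) -> float:
    """Cost in dollars of generating `images` images on pay-per-image credits."""
    return images * usd_per_1000 / 1000


def break_even_images(subscription_usd: float = SUBSCRIPTION_USD,
                      usd_per_1000: float = USD_PER_1000_IMAGES) -> float:
    """Number of images per month at which both pricing models cost the same."""
    return subscription_usd * 1000 / usd_per_1000


if __name__ == "__main__":
    print(f"500 images on credits: ${credit_cost(500):.2f}")
    print(f"Break-even point: {break_even_images():.0f} images per month")
```

With these numbers the subscription only pays for itself above roughly 2500 images a month, which sounds like a lot until you start iterating on every prompt.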

Another thing I've noticed: writing prompts (the natural language input in which you define what you want the AI to create) is hard. I mean: really hard. After the honeymoon phase (writing "funny prompts"), I tried to develop something interesting. After ten minutes, I came up with:

Beautiful landscape with big trees

The result was not impressive. It wasn't even close to the art I saw online. I was thinking about this when I was taking a long walk today: why is it so hard to put my thoughts into words? Why is it hard to have creative ideas to begin with? Probably because we don't give our creative thoughts enough space. Do you give your brain time to process everything you see, feel and hear? What doesn't help is that the better you describe the image, the better the output will be. The bigger your vocabulary, the better the picture. As you might know, I'm Dutch. That means I write "big green tree" when I mean "lush, overgrown forest."

Then I discovered the feed on the Midjourney website. This page shows all the (trending) artwork, including the prompt used to generate it. I copied the prompts that I liked and started to tweak them. There are a few exciting things I would like to share with you. Firstly, you must include the specific style you want the image to have. For instance, I really like Zelda games. One phrase that often shows up in prompts is "breath of the wild style." This returns an anime-like image. Other things to include in your prompt: camera angle (portrait or wide-angle shot), art style (hyper-realistic or anime), and historical era (baroque, Victorian, 15th century). Secondly, you must be specific about the feeling you want the image to convey. Instead of "a mountain town," write "a curious little village with cascading wooden cottages." Try "an abandoned alien spaceship on a rocky moon-like planet" instead of "an alien spaceship on the moon." The last thing that I noticed is that most people use an image prompt (an existing image) to give the AI something to work with. I don't know how I feel about that; it takes a bit of the magic away. I can see this becoming a no-go in future AI-art communities, much like how old-school DJs still insist on vinyl.
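The tips above (a specific subject, plus camera angle, era, and style) can be sketched as a tiny prompt builder. This is only an illustration: `build_prompt` is a hypothetical helper, and the comma-separated layout is an assumption based on how prompts tend to be written, not an official syntax of Midjourney or any other tool.

```python
from typing import Optional


def build_prompt(subject: str,
                 camera: Optional[str] = None,
                 era: Optional[str] = None,
                 style: Optional[str] = None) -> str:
    """Join a descriptive subject with optional modifiers into one prompt string.

    The comma-separated format is an assumption for illustration only.
    """
    parts = [subject]
    # Append only the modifiers that were actually provided.
    for modifier in (camera, era, style):
        if modifier:
            parts.append(modifier)
    return ", ".join(parts)


if __name__ == "__main__":
    vague = build_prompt("a mountain town")
    specific = build_prompt(
        "a curious little village with cascading wooden cottages",
        camera="wide angle shot",
        style="breath of the wild style",
    )
    print(vague)
    print(specific)
```

Keeping the subject and the modifiers separate makes it easy to tweak one piece at a time, which is most of what prompt iteration comes down to.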

Copying someone else's prompt was fun the first few times. However, it got old quickly. I sat down with my notebook and thought about my favorite things. These AI tools open a few doors that would typically stay closed for me. The first thing is that I can expand the worlds that I love. These tools know the art styles of the artists who made the games, movies, and TV shows I love. This will probably lead to some copyright infringement debate in the future. The next thing is that you can easily combine these different styles and subjects into your ultimate dream world. Game of Thrones, but in Breath of the Wild style? Done. Indian temples on the moon in 80s science fiction style? You got it. Dystopian cyberpunk bookstore in Cowboy Bebop style? Not a problem. Combine this with the unlimited iterations (generating new images with the same prompt), and we are getting into "this is revolutionary" territory. AI image generation tools allow us to brute force creativity.
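The "brute force" part can be taken quite literally. As a rough sketch, the snippet below pairs every subject with every style from the examples above to produce a list of prompts to try. The `all_prompts` helper and the comma-separated format are assumptions for illustration; pasting the prompts into an actual tool is left out.

```python
from itertools import product

# Subjects and styles taken from the examples in this newsletter.
subjects = [
    "Game of Thrones",
    "Indian temples on the moon",
    "dystopian cyberpunk bookstore",
]
styles = [
    "Breath of the Wild style",
    "80s science fiction style",
    "Cowboy Bebop style",
]


def all_prompts(subjects: list, styles: list) -> list:
    """Return one prompt for every (subject, style) combination."""
    return [f"{subject}, {style}" for subject, style in product(subjects, styles)]


if __name__ == "__main__":
    for prompt in all_prompts(subjects, styles):
        print(prompt)
```

Three subjects and three styles already give nine prompts, and each prompt can be run again and again for new variations.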

Limitations

So Bart, two days ago you hated Dall-E 2, and now you sound like the biggest fanboy ever. Did the AI get to your head or something? Yes, they did. I got...—330**^^we!#are+_cpming<<fr||"You

Excuse me; my computer started to act up. Weird. Where was I? Ah, the revolutionary part. There are some limitations to these tools:

• They hate text. All text visible on your images is gibberish and doesn't make any sense.

• There are always some artifacts present in your images. Although not as apparent as the garbled text, you can still easily spot them. Faces especially can look a bit weird. My best explanation is that the images look fantastic when they are small, but when you enlarge them, you will see some strange and inexplicable parts.

• It is challenging to change small parts of your image. I made a fantastic ancient bitcoin machine, but one part of the image was filled with artifacts. If I want to use this image professionally, I should be able to clean up parts of the image (or at least export it as a Photoshop file).

• It is almost impossible to build a portfolio based on an image you like. Let me explain: say you want to make a comic book based on a really cool image you have generated. Getting a whole series of new images that match that first one isn't easy right now.

I think that all these issues can be resolved in the coming years, and maybe even sooner. Midjourney alone received multiple updates last week.

Preliminary conclusion

I am having a lot of fun. After the difficult first day, I got into the flow on the second day. Blending my favorite TV series, games, art styles, and anime artists led to great results. The thing that got my blood flowing was the community starting to gather around the AI fire. Take this AI-generated sci-fi film, this comic book, or this game where AI monsters fight in an arena narrated by GPT-3 (that is for another blog).

So, what did I create? I will share the best ones (including the exact prompts, so you can try them out yourself).

What I have been doing.

• I stopped reading Soul in the Game. Not because it is a bad book, but because it isn't a book at all. It is a collection of articles that are fun to read on their own. But after reading an article in which the author introduces his 14-year-old son for the umpteenth time, I decided it was time to move on to the next book.

• The next book is Children of Ruin, the second book in the Children of Time novels. I quite liked the first book, and so far, this book doesn't disappoint.

• My next book will probably be something more fantasy and a little bit less sci-fi. Maybe I should read Fire & Blood. Or watch the series, just like everybody else.

• I told you to remove TikTok.

• I don't know how I found this tweet, but it has become my go-to answer to everyone on Telegram.

• This video (once again) showed me what a powerhouse DaVinci Resolve 18 is and that we should start planning the funeral of Premiere Pro.

• I watched this entire video explaining all the tricks used in WWE matches, and I don't regret a second of it.

• The original samples for one of the internet's biggest meme songs were found. I honestly think it is one of the best hip-hop instrumentals ever.

Close

Thank you for reading; I appreciate it. Please use the comment section to post what you think about these AI tools. If you have any other questions or feedback, feel free to drop them in the same comment section.

If you like the newsletter, please forward this edition to one of your friends, colleagues, or family members who may also like the newsletter. Some call this a pyramid scheme; I call it sharing the joy. Until next week,

Bart
